A deep neural network (DNN) is a type of machine learning model loosely inspired by the human brain. Like the brain, a DNN consists of nodes and connections that process incoming information to learn a specific task. However, such networks have a long way to go before they can understand or process information the way a human brain does. One major issue is that, unlike the brain, a DNN relies on large amounts of data and computing resources to extract meaningful information.

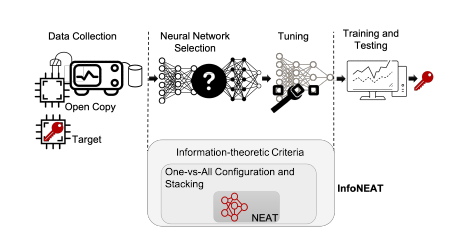

To tackle this issue, a team made up of FINS Associate Director Dr. Domenic Forte; his research assistant and PhD student, Rabin Acharya; and Dr. Fatemeh Ganji, a former UF postdoctoral associate who is now an Assistant Professor at Worcester Polytechnic Institute (WPI), devised an approach that lets a DNN automatically evolve its architecture until it successfully learns to solve the problem. To arrive at this solution, the team first studied how much new information could be gained from the data, then used these findings to develop compact and robust DNNs that can quickly assess the security vulnerabilities of critical hardware devices. The approach, which they named InfoNEAT, is said to function “similar to how human brains evolve over time and requires few resources to perform simple tasks such as object or facial recognition”.

Figure 1: Overview of the proposed “InfoNEAT”, which extends the NEAT (NeuroEvolution of Augmenting Topologies) algorithm to meet the requirements of side-channel analysis (SCA). Compared with conventional ML-enabled SCA, network selection and hyperparameter tuning are handled automatically.
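The core idea of letting a network grow its own structure until it solves a task can be illustrated with a toy neuroevolution loop. This is a minimal sketch under stated assumptions, not NEAT and not InfoNEAT: it has no crossover, speciation, or innovation tracking, and none of InfoNEAT's information-theoretic components. A population of tiny networks for the XOR problem starts with no hidden units; mutation perturbs weights and occasionally adds a hidden unit, and a hypothetical size penalty in the fitness function favors compact networks. All names (`Genome`, `mutate`, `evolve`) and parameter values are illustrative.

```python
import math
import random

# Toy task (assumption for illustration): learn XOR.
XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))


class Genome:
    """Hypothetical genome: a variable number of tanh hidden units plus direct input->output links."""

    def __init__(self, w_hidden, w_out_hidden, w_direct):
        self.w_hidden = w_hidden          # one [w_x0, w_x1, w_bias] list per hidden unit
        self.w_out_hidden = w_out_hidden  # one hidden->output weight per hidden unit
        self.w_direct = w_direct          # [w_x0, w_x1, w_bias] straight to the output

    @property
    def size(self):
        return len(self.w_hidden)

    def forward(self, x0, x1):
        z = self.w_direct[0] * x0 + self.w_direct[1] * x1 + self.w_direct[2]
        for (a, b, c), v in zip(self.w_hidden, self.w_out_hidden):
            z += v * math.tanh(a * x0 + b * x1 + c)
        return sigmoid(z)


def minimal_genome(rng):
    # Start from the smallest structure: no hidden units at all.
    return Genome([], [], [rng.gauss(0, 1) for _ in range(3)])


def mutate(g, rng, sigma=0.5, p_add=0.1):
    # Perturb every weight, and with probability p_add grow one new hidden unit.
    child = Genome([[w + rng.gauss(0, sigma) for w in unit] for unit in g.w_hidden],
                   [w + rng.gauss(0, sigma) for w in g.w_out_hidden],
                   [w + rng.gauss(0, sigma) for w in g.w_direct])
    if rng.random() < p_add:
        child.w_hidden.append([rng.gauss(0, 1) for _ in range(3)])
        child.w_out_hidden.append(rng.gauss(0, 1))
    return child


def fitness(g, size_penalty=0.01):
    # Higher is better: low error, with a penalty per hidden unit to favor compact networks.
    mse = sum((g.forward(*x) - y) ** 2 for x, y in XOR_DATA) / len(XOR_DATA)
    return -mse - size_penalty * g.size


def accuracy(g):
    return sum((g.forward(*x) > 0.5) == (y > 0.5) for x, y in XOR_DATA) / len(XOR_DATA)


def evolve(generations=300, pop_size=60, elite=10, seed=0):
    rng = random.Random(seed)
    pop = [minimal_genome(rng) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[:elite]  # elitism: the best genomes survive unchanged
        pop = parents + [mutate(rng.choice(parents), rng) for _ in range(pop_size - elite)]
    return max(pop, key=fitness)


if __name__ == "__main__":
    best = evolve()
    print(f"hidden units: {best.size}, XOR accuracy: {accuracy(best):.2f}")
```

The size penalty is what steers the search toward small networks: a child with an extra hidden unit only outranks its parent if the unit lowers the error by more than the penalty it costs.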

“Most DL approaches still rely on trial and error… you try an architecture and provide it with training data. Whereas our approach automatically optimizes both the architecture and the model for a classification task that not only utilizes fewer resources, but we found to be more generalizable than the trial and error method,” said Dr. Forte.

To showcase the capabilities of the evolved DNNs, the team used them to assess the security of critical hardware devices through side-channel analysis (SCA). SCA examines physical emanations from hardware devices, such as power consumption and electromagnetic emissions, to extract sensitive information such as secret cryptographic keys. In recent years, DNNs have proven quite effective for this purpose. However, designing these DNNs is a tedious process, and the resulting networks are quite bulky. In contrast to the DNNs typically used for SCA, InfoNEAT automatically trains compact models that are up to six times smaller and require as little as half the computing resources of traditional DNNs. Moreover, the team's results show that these models can successfully assess previously unseen and far more complicated datasets.
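To make the idea of side-channel analysis concrete, here is a small simulation. It uses correlation power analysis (CPA), a classic non-learning attack, not the DNN-based approach the team used. The leakage model (Hamming weight of the first-round AES S-box output), the noise level, the key byte `0x2B`, and all function names are illustrative assumptions. For each of the 256 guesses of one key byte, the code predicts the leakage and ranks guesses by how strongly that prediction correlates with the simulated power samples. Roughly speaking, DNN-based SCA replaces this hand-chosen leakage model with a classifier learned from profiling traces, and that is where network design becomes the tedious step the article describes.

```python
import math
import random


def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo the AES polynomial x^8 + x^4 + x^3 + x + 1."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return product


def build_sbox():
    """Build the AES S-box: multiplicative inverse in GF(2^8), then the affine transform."""
    sbox = []
    for x in range(256):
        inv = 0 if x == 0 else next(y for y in range(1, 256) if gf_mul(x, y) == 1)
        s = inv
        for shift in range(1, 5):  # XOR in the byte rotated left by 1..4 bits
            s ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
        sbox.append(s ^ 0x63)
    return sbox


SBOX = build_sbox()


def hamming_weight(v):
    return bin(v).count("1")


def simulate_traces(key_byte, n, noise_sd, rng):
    """Toy device: each 'trace' is one sample leaking HW(SBOX[p ^ key]) plus Gaussian noise."""
    plaintexts = [rng.randrange(256) for _ in range(n)]
    leakage = [hamming_weight(SBOX[p ^ key_byte]) + rng.gauss(0.0, noise_sd) for p in plaintexts]
    return plaintexts, leakage


def pearson(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def cpa_rank_keys(plaintexts, leakage):
    """Return all 256 key-byte guesses, best-correlating first."""
    scores = []
    for guess in range(256):
        model = [hamming_weight(SBOX[p ^ guess]) for p in plaintexts]
        scores.append((abs(pearson(model, leakage)), guess))
    scores.sort(reverse=True)
    return [guess for _, guess in scores]


if __name__ == "__main__":
    rng = random.Random(2023)
    secret = 0x2B  # hypothetical secret key byte for the simulation
    pts, leak = simulate_traces(secret, n=1000, noise_sd=1.0, rng=rng)
    ranking = cpa_rank_keys(pts, leak)
    print(f"true key 0x{secret:02X}, best guess 0x{ranking[0]:02X}")
```

With enough traces the correct guess should rank first. Increasing `noise_sd` or reducing `n` shows how the attack degrades, which is the kind of setting where learned models are often used instead of a fixed leakage model.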

The team will introduce InfoNEAT and present their findings at the next Conference on Cryptographic Hardware and Embedded Systems (CHES), held in Prague, Czech Republic, in September 2023.

CHES, regarded as one of the top venues for hardware security research and the top venue for side-channel analysis and solutions, is unique among its peers in that it is both an international conference and a journal (TCHES).

Acknowledgment: This work was supported in part by AFOSR, AFRL, and NSF.